The probability of the original input list being in the exact order it’s in is 1/(n!). There is such a small likelihood of this that it’s clearly absurd to say that this happened by chance, so it must have been consciously put in that order by an intelligent Sorter. Therefore it’s safe to assume that it’s already optimally Sorted in some way that transcends our naïve mortal understanding of “ascending order”.

Tag: cs

Computational Complexity and the Anthropic Principle

a fun talk loosely based on the paper “NP-complete Problems and Physical Reality”, which i thoroughly enjoyed.

Complexity Zoo

huh. when i was in school we pretty much stopped at P and NP, now there are 545 complexity classes.

Scott Aaronson

I have therefore reached a decision. From this day forward, my allegiances in the String Wars will be open for sale to the highest bidder. Like a cynical arms merchant, I will offer my computational-complexity and humor services to both sides, and publicly espouse the views of whichever side seems more interested in buying them at the moment. Fly me to an exotic enough location, put me up in a swank enough hotel, and the number of spacetime dimensions can be anything you want it to be: 4, 10, 11, or even 172.9+3πi.

i, too, am a scott aaronson fan. his NP-complete Problems and Physical Reality is the snarkiest physics paper i have ever read, and one of the papers he writes every now and then that are a bit more widely accessible. and now this one…

https://arxiv.org/abs/1108.1791

all i ever wanted to know about the intersection of quantum computing, cosmology, complexity theory etc

Rendering Effective Route Maps

this is a classic paper for routing: exaggerate the details to make them stand out, and compress long stretches.

Route maps, which depict a path from one location to another, have emerged as one of the most popular applications on the Web. Current computer-generated route maps, however, are often very difficult to use. In this paper we present a set of cartographic generalization techniques specifically designed to improve the usability of route maps. Our generalization techniques are based both on cognitive psychology research studying how route maps are used and on an analysis of the generalizations commonly found in handdrawn route maps. We describe algorithmic implementations of these generalization techniques within LineDrive, a real-time system for automatically designing and rendering route maps. Feedback from over 2200 users indicates that almost all believe LineDrive maps are preferable to using standard computer-generated route maps alone.

Future of computer science

Programs are getting re-shaped everywhere, and in many cases this is causing a real identity crisis. Which is it: computational biology, or bioinformatics? The fear at first was that computer science would become the servant of all the other disciplines; however, what some schools are seeing is that in the cross-discipline research efforts, often the computer scientists are emerging as the lead researchers on the projects. Things are going to look very different in 5-10 years. I honestly can’t tell you what a CS department will look like. But the very good news here is that computer science is alive and well, and in fact there is an energy and enthusiasm in the field that I have not felt for a long time, and a recognition that computing will have a huge impact on the world in almost every field of endeavor.

paul graham expresses the same notion in his typical eloquent style:

I’ve never liked the term “computer science.” The main reason I don’t like it is that there’s no such thing. Computer science is a grab bag of tenuously related areas thrown together by an accident of history, like Yugoslavia. At one end you have people who are really mathematicians, but call what they’re doing computer science so they can get DARPA grants. In the middle you have people working on something like the natural history of computers– studying the behavior of algorithms for routing data through networks, for example. And then at the other extreme you have the hackers, who are trying to write interesting software, and for whom computers are just a medium of expression, as concrete is for architects or paint for painters. It’s as if mathematicians, physicists, and architects all had to be in the same department.

when i started my studies in 1997, freshly minted graduates would mostly end up in incredibly dull bean-counter roles at various financial institutions. luckily for me, the internet shifted the focus away from computation to communication. if kevin’s and paul’s observations are correct, the hard (and interesting) problems outside of computer science are now coming into view. exciting times.

Digital Philosophy

i’m still reading a new kind of science. one concept that wolfram advocates is that of finite nature.

The basic idea is that of ‘Finite Nature’. There is some discussion of the exact meaning of this phrase; my preferred definition is, the proposition that a finite quantity of space/time, containing a finite amount of matter/energy, is capable (in principle) of being simulated EXACTLY using a finite amount of computing power on a Universal Turing Machine.

The implications of the Finite Nature concept are pretty far-reaching. Among other things, any physical principle that is presently theorized to be based on continuous functions of any kind, must be supposed to actually be an approximation of an underlying computational process. This reverses the normal relationship, where digital processes (such as CA’s, or generally any quantized matrix calculations such as those used in computational dynamics) are seen as approximations of an underlying, continuous reality.

although wolfram has his detractors, his book is highly inspirational. he certainly has done much to publicize the field of digital philosophy.

Quantum Computing

It is becoming increasingly clear that, if a useful device for quantum computation will ever be built, it will be embodied by a classical computing machine with control over a truly quantum subsystem, this apparatus performing a mixture of classical and quantum computation. This paper investigates a possible approach to the problem of programming such machines: a template high level quantum language is presented which complements a generic general purpose classical language with a set of quantum primitives.

A very interesting paper, basically stating that any quantum computer will need a classical front end to deal with data pre- and post-processing. even the very pragmatic distinction between call-by-value and call-by-reference needs to be rethought:

It is well known that the no-cloning theorem excludes the possibility of replicating the state of a generic quantum system. Since the call-by-value paradigm is based on the copy primitive, this means that quantum programming can not use call-by-value; therefore a mechanism for addressing parts of already allocated quantum data must be supplied by the language.
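
for reference, the standard textbook argument behind the no-cloning theorem fits in three lines. this is a sketch of the usual proof, not something taken from the paper itself; $U$ is a hypothetical copying unitary and $|0\rangle$ a blank target register. suppose $U$ could copy two arbitrary states:

$$
U\bigl(|\psi\rangle \otimes |0\rangle\bigr) = |\psi\rangle \otimes |\psi\rangle,
\qquad
U\bigl(|\varphi\rangle \otimes |0\rangle\bigr) = |\varphi\rangle \otimes |\varphi\rangle .
$$

unitaries preserve inner products, so taking the inner product of the two left-hand sides and of the two right-hand sides gives

$$
\langle \psi | \varphi \rangle \, \langle 0 | 0 \rangle = \langle \psi | \varphi \rangle^{2}
\quad\Longrightarrow\quad
\langle \psi | \varphi \rangle \in \{0, 1\} .
$$

so a single device can only copy states that are identical or orthogonal, never a generic unknown state, which is why a copy-based call-by-value has nothing to stand on.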

2007-02-12: D-Wave 16 qubit prototype, with hopes for a 1024 qubit system in late 2008. funny: they are not sure if it is a quantum computer at all, it might be an analog computer.

2007-04-22: the quantum computation version of the stacked turtle

But it was still pretty exciting stuff. Holy Zarquon, they said to one another, an infinitely powerful computer? It was like a 1000 Christmases rolled into 1. Program going to loop forever? You knew for a fact: this thing could execute an infinite loop in less than 10 seconds. Brute force primality testing of every single integer in existence? Easy. Pi to the last digit? Piece of cake. Halting Problem? Sa-holved.

They hadn’t announced it yet. They’d been programming. Obviously they hadn’t built it just to see if they could. They had had plans. In some cases they had even had code ready and waiting to be executed. One such program was Diane’s. It was a universe simulator. She had started out with a simulated Big Bang and run the thing forwards in time by 13.6b years, to just before the present day, watching the universe develop at every stage – taking brief notes, but knowing full well there would be plenty of time to run it again later, and mostly just admiring the miracle of creation.

2007-08-30: What Google Won’t Find

For “generic” problems of finding a needle in a haystack, most of us believe that quantum computers will give at most a polynomial advantage over classical ones.
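
the best-known example of that polynomial advantage is Grover's algorithm: roughly sqrt(N) oracle queries instead of roughly N classical lookups. below is a minimal classical simulation of the amplitudes, a sketch only; the function name and the choice of N are mine, not from the article, and simulating it this way of course takes more than sqrt(N) work.

```python
import math

def grover_success_probability(num_items, marked_index):
    """Simulate Grover search on a real-valued amplitude vector.

    Returns (number of oracle queries, probability of measuring the marked item).
    """
    # Start in the uniform superposition over all items.
    amplitudes = [1.0 / math.sqrt(num_items)] * num_items
    # About (pi/4) * sqrt(N) iterations maximise the success probability.
    iterations = math.floor(math.pi / 4 * math.sqrt(num_items))
    for _ in range(iterations):
        # Oracle: flip the sign of the marked item's amplitude.
        amplitudes[marked_index] = -amplitudes[marked_index]
        # Diffusion: reflect every amplitude about the mean.
        mean = sum(amplitudes) / num_items
        amplitudes = [2 * mean - a for a in amplitudes]
    return iterations, amplitudes[marked_index] ** 2

queries, probability = grover_success_probability(1024, marked_index=7)
print(queries, probability)
```

for 1024 items this uses 25 queries where a classical search expects about 512, a quadratic gain rather than an exponential one.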

2011-01-20: 10b qubits is very significant. i am sure there are all sorts of caveats, but still: wow

2011-10-04: Philosophy and Theoretical Computer Science class by Scott Aaronson.

This new offering will examine the relevance of modern theoretical computer science to traditional questions in philosophy, and conversely, what philosophy can contribute to theoretical computer science. Topics include: the status of the Church-Turing Thesis and its modern polynomial-time variants; quantum computing and the interpretation of quantum mechanics; complexity aspects of the strong-AI and free-will debates; complexity aspects of Darwinian evolution; the claim that “computation is physical”; the analog/digital distinction in computer science and physics; Kolmogorov complexity and the foundations of probability; computational learning theory and the problem of induction; bounded rationality and common knowledge; new notions of proof (probabilistic, interactive, zero-knowledge, quantum) and the nature of mathematical knowledge. Intended for graduate students and advanced undergraduates in computer science, philosophy, mathematics, and physics. Participation and discussion are an essential part of the course.

2013-04-13: Quantum computing since Democritus. Written in the spirit of the likes of Richard Feynman, Carl Sagan, and Douglas Hofstadter, and touching on some of the most fundamental issues in science, the unification of computation and physics. kind of like a new kind of science was, without the bs. Plus Scott is a funny guy, so even if you only understand 5% (likely, given the deep topics), seems worth it. If you want to get a taste, try this paper: NP-complete Problems and Physical Reality

2017-07-09: Multi-colored photons

the technology developed is readily extendable to create 2-quDit systems with more than 9000 dimensions (corresponding to 12 qubits and beyond, comparable to the state of the art in significantly more expensive/complex platforms).

2018-10-09: Quantum Verification. How do you know whether a quantum computer has done anything quantum at all?

After 8 years of graduate school, Mahadev has succeeded. She has come up with an interactive protocol by which users with no quantum powers of their own can nevertheless employ cryptography to put a harness on a quantum computer and drive it wherever they want, with the certainty that the quantum computer is following their orders. Mahadev’s approach gives the user “leverage that the computer just can’t shake off.” For a graduate student to achieve such a result as a solo effort is “pretty astounding”. Quantum computation researchers are excited not just about what Mahadev’s protocol achieves, but also about the radically new approach she has brought to bear on the problem. Using classical cryptography in the quantum realm is a “truly novel idea. I expect many more results to continue building on these ideas.”

2019-01-19: Spacetime QEC

space-time achieves its “intrinsic robustness,” despite being woven out of fragile quantum stuff. “We’re not walking on eggshells to make sure we don’t make the geometry fall apart. I think this connection with quantum error correction is the deepest explanation we have for why that’s the case.”

2019-04-19: Quantum Diff Privacy. On connections between differential privacy and quantum computing

2019-06-18: Neven’s Law

That rapid improvement has led to what’s being called “Neven’s law,” a new kind of rule to describe how quickly quantum computers are gaining on classical ones. Quantum computers are gaining computational power relative to classical ones at a “doubly exponential” rate — a staggeringly fast clip. With double exponential growth, “it looks like nothing is happening, nothing is happening, and then whoops, suddenly you’re in a different world.”
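
to get a feel for what “doubly exponential” means, here is a tiny comparison. it is just arithmetic, assuming the usual reading of the claim (the exponent itself grows exponentially); the function names are mine.

```python
def exponential(step):
    """Plain exponential growth: 2^step."""
    return 2 ** step

def doubly_exponential(step):
    """Doubly exponential growth: 2^(2^step)."""
    return 2 ** (2 ** step)

for step in range(1, 7):
    print(step, exponential(step), doubly_exponential(step))
```

after six steps the plain exponential has reached 64, while the doubly exponential one is at 2^64.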

This is certainly the most extreme of the nerd rapture curves i have seen:

the very near future should be the watershed moment, where quantum computers surpass conventional computers and never look back. Moore’s Law cannot catch up. A year later, it outperforms all computers on Earth combined. Double qubits again the following year, and it outperforms the universe.

2019-06-23: Diamonds and Ion Qubits

In the mid-2000s there was a small diamond mined from the Ural Mountains. It was called the ‘magic Russian sample’. The diamond was extremely pure, almost all carbon, which isn’t common, but with a few impurities that gave it strange quantum mechanical properties. Now anyone can go online and buy a $500 quantum-grade diamond for an experiment. The diamonds have nitrogen impurities, but what Schloss’s group needs is a hole right next to one, called a nitrogen vacancy. Russian “magic diamonds” can hold qubits in place and thus act the same way that a trapped-ion rig does. By replacing a single carbon atom in a diamond’s atomic lattice with a nitrogen atom and leaving a neighboring lattice node empty, engineers can create what’s called a nitrogen-vacancy (NV) center. This is generally inexpensive since it’s derived from nature.

2019-07-04: John Wright joins UT Austin

With no evaluative judgment attached, this is an unprecedented time for quantum computing as a field. Where once faculty applicants struggled to make a case for quantum computing (physics departments: “but isn’t this really CS?” / CS departments: “isn’t it really physics?” / everyone: “couldn’t this whole QC thing, like, all blow over in a year?”), today departments are vying with each other and with industry players and startups to recruit talented people. In such an environment, we’re fortunate to be doing as well as we are. We hope to continue to expand.

2019-07-26: Quantum hardware should make Monte Carlo methods more powerful & accurate.
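
for context: the error of a classical Monte Carlo estimate shrinks like 1/sqrt(N) in the number of samples N, while quantum amplitude estimation can in principle reach roughly 1/N. the sketch below only shows the classical side, using the textbook estimate of pi; the function name, seed and sample counts are arbitrary choices of mine.

```python
import math
import random

def estimate_pi(num_samples, rng):
    """Classical Monte Carlo: fraction of random points inside the quarter circle."""
    inside = 0
    for _ in range(num_samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            inside += 1
    return 4.0 * inside / num_samples

rng = random.Random(42)  # fixed seed so runs are repeatable
for num_samples in (100, 10_000, 100_000):
    estimate = estimate_pi(num_samples, rng)
    print(num_samples, estimate, abs(estimate - math.pi))
```

each extra digit of accuracy costs about 100x more samples classically; a quadratic quantum speedup would cut that to about 10x.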

2019-08-20: 1 Million Qubits

Fujitsu has a Digital Annealer with 8192 Qubits and a 1M qubit system in the lab. Digital Annealer is a new technology that is used to solve large-scale combinatorial optimization problems instantly. Digital Annealer uses a digital circuit design inspired by quantum phenomena and can solve problems which are difficult and time consuming for classical computers.

2019-11-12: Topological Quantum Computer

Microsoft is developing Majorana-based topological quantum computer qubits which will be higher-quality and lower error rate qubits. A high-quality hybrid system made of InSb nanowires with epitaxial-grown Al shells has revealed ballistic superconductivity and quantized zero-bias conductance peak. This holds great promise for making the long-sought topological quantum qubits.

2019-12-13: 10000x Faster Quantum Simulations

they have made the simulation of the quantum electrons so fast that it could run extremely long without restrictions and the effect of their motion on the movement of the slow ions would be visible

2020-01-14: MIP*=RE

easily one of the biggest complexity-theoretic surprises so far in this century

2020-05-07: Room Temperature QC

Transparent crystals with optical nonlinearities could enable quantum computing at room temperature by 2030

2020-12-03: BosonSampling. A second method achieves quantum supremacy.

Do you have any amusing stories? When I refereed the Science paper, I asked why the authors directly verified the results of their experiment only for up to 26-30 photons, relying on plausible extrapolations beyond that. While directly verifying the results of n-photon BosonSampling takes ~2^n time for any known classical algorithm, I said, surely it should be possible with existing computers to go up to n=40 or n=50? A couple weeks later, the authors responded, saying that they’d now verified their results up to n=40, but it burned $400000 worth of supercomputer time so they decided to stop there. This was by far the most expensive referee report I ever wrote!

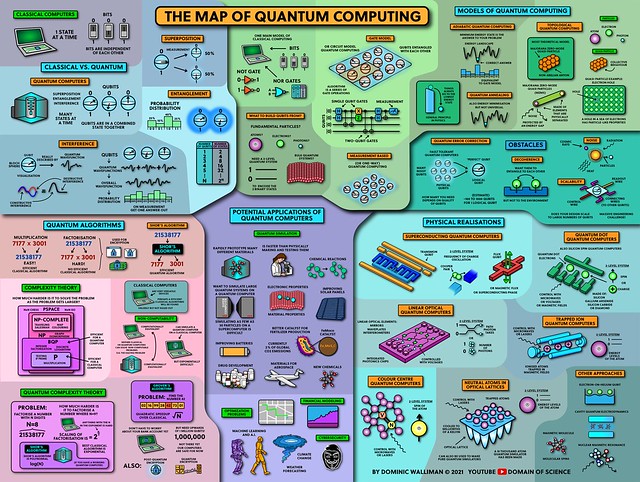

2021-12-06: Quantum Computing Overview. A really good overview of the field of quantum computing with a clear explanation of how they work, why people are excited about quantum algorithms and their value, the potential applications of quantum computers including quantum simulation, artificial intelligence and more, and the different models and physical implementations people are using to build quantum computers like superconducting devices, quantum dots, trapped ions, photons or neutral atoms, and the challenges they face.

2023-02-23: Quantum Error Correction breakthrough

Here we report the measurement of logical qubit performance scaling across several code sizes, and demonstrate that our system of superconducting qubits has sufficient performance to overcome the additional errors from increasing qubit number. We find that our distance-5 surface code logical qubit modestly outperforms an ensemble of distance-3 logical qubits on average, in terms of both logical error probability over 25 cycles and logical error per cycle ((2.914 ± 0.016)% compared to (3.028 ± 0.023)%). To investigate damaging, low-probability error sources, we run a distance-25 repetition code and observe a 1.7 × 10^−6 logical error per cycle floor set by a single high-energy event (1.6 × 10^−7 excluding this event). We accurately model our experiment, extracting error budgets that highlight the biggest challenges for future systems. These results mark an experimental demonstration in which quantum error correction begins to improve performance with increasing qubit number, illuminating the path to reaching the logical error rates required for computation.
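
the idea that more qubits can mean fewer errors is easiest to see in a toy classical repetition code with majority-vote decoding. this is not the surface code from the paper, and it ignores measurement errors and correlated events; the function name and the 1% physical error rate are assumptions for illustration.

```python
from math import comb

def logical_error_rate(distance, physical_error):
    """Probability that a majority vote over `distance` noisy copies is wrong.

    Assumes an odd `distance` and independent bit flips with probability
    `physical_error` on each copy.
    """
    majority = distance // 2 + 1
    return sum(
        comb(distance, k)
        * physical_error ** k
        * (1 - physical_error) ** (distance - k)
        for k in range(majority, distance + 1)
    )

for distance in (1, 3, 5, 25):
    print(distance, logical_error_rate(distance, physical_error=0.01))
```

below the threshold (50% in this toy model) every increase in distance suppresses the logical error; above it, adding copies makes things worse, which is why the hardware has to be good enough before scaling up helps.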

2023-06-19: It might be possible to work around noise, making quantum computing practical.

IBM physicist Abhinav Kandala conducted precise measurements of the noise in each of their qubits, which can follow relatively predictable patterns determined by their position inside the device, microscopic imperfections in their fabrication and other factors. Using this knowledge, the researchers extrapolated back to what their measurements — in this case, of the full state of magnetization of a 2D solid — would look like in the absence of noise. They were then able to run calculations involving all of Eagle’s 127 qubits and up to 60 processing steps — more than any other reported quantum-computing experiment. The results validate IBM’s short-term strategy, which aims to provide useful computing by mitigating, as opposed to correcting, errors. Over the longer term, IBM and most other companies hope to shift towards quantum error correction, a technique that will require large numbers of additional qubits for each data qubit.
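
the “extrapolate back to zero noise” step can be sketched in a few lines: measure the same quantity at deliberately amplified noise levels, fit a curve, and read off its value at noise scale 0. everything below is a toy under stated assumptions: the exponential decay model, the numbers and the function name are mine, and the data is synthetic rather than IBM's.

```python
import math

def extrapolate_to_zero_noise(scales, values):
    """Least-squares fit of log(value) = a + b * scale; returns exp(a).

    Assumes all values are positive and decay roughly exponentially
    with the noise scale factor.
    """
    logs = [math.log(v) for v in values]
    n = len(scales)
    mean_x = sum(scales) / n
    mean_y = sum(logs) / n
    sxx = sum((x - mean_x) ** 2 for x in scales)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(scales, logs))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return math.exp(intercept)

# Synthetic "measurements": the ideal value 0.8 damped by amplified noise.
TRUE_VALUE = 0.8
DECAY = 0.3
scales = [1.0, 1.5, 2.0, 3.0]  # scale 1.0 is the hardware's native noise
measured = [TRUE_VALUE * math.exp(-DECAY * s) for s in scales]

estimate = extrapolate_to_zero_noise(scales, measured)
print(measured[0], estimate)
```

the raw value at native noise is noticeably below 0.8, while the extrapolated estimate recovers it; with real, noisy data the fit would only be approximate.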

Algorithmic beauty

- Judy rarely compromises speed/space performance for simplicity (Judy will never be called simple except at the API).

- Judy is designed to avoid cache-line fills wherever possible. (This is the main design criteria for Judy.)

it is rare that you get in touch with basic algorithms as a software engineer these days. lo and behold, there is still scope for fundamental improvements to very basic algorithms, such as trees. ah, such beauty. if i only had the mental staying power to fully appreciate it 🙂

BNF

As i’m learning lots about static analysis, i’m re-discovering some classic computer science papers, such as the one (1977) by john backus (of BNF and FORTRAN fame):

Conventional programming languages are growing ever more enormous, but not stronger. Inherent defects at the most basic level cause them to be both fat and weak: their primitive word-at-a-time style of programming inherited from their common ancestor–the von Neumann computer, their close coupling of semantics to state transitions, their division of programming into a world of expressions and a world of statements, their inability to effectively use powerful combining forms for building new programs from existing ones, and their lack of useful mathematical properties for reasoning about programs.

i can relate to his statement that “programming languages appear to be in trouble”. each new language adds new “features”, yet little changes, and we are still thinking in terms of state machines, very low-level indeed.

further down, we learn that

The models of computing systems that underlie programming languages fall into 3 classes: (a) simple operational models (e.g., Turing machines), (b) applicative models (e.g., the lambda calculus), and (c) von Neumann models (e.g., conventional computers and programming languages). Each class of models has an important difficulty: The programs of class (a) are inscrutable; class (b) models cannot save information from one program to the next; class (c) models have unusable foundations and programs that are conceptually unhelpful.

the main argument is that since traditional languages model the behavior of hardware, they are bound by its limitations:

Thus variables = storage cells; assignment statements = fetching, storing, and arithmetic; control statements = jump and test instructions.
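
backus illustrates the contrast with an inner product. here is a rough python rendering of both styles; it is my own sketch, not his FP notation, and the function names are made up.

```python
from functools import reduce
from operator import add, mul

def inner_product_word_at_a_time(a, b):
    """Von Neumann style: one storage cell updated one word at a time."""
    total = 0                        # variable = storage cell
    for i in range(len(a)):          # control statement = jump and test
        total = total + a[i] * b[i]  # assignment = fetch, arithmetic, store
    return total

def inner_product_combining_forms(a, b):
    """Functional style: build the result by combining whole-list operations."""
    # map pairs the elements up and multiplies each pair;
    # reduce then inserts + between the products.
    return reduce(add, map(mul, a, b), 0)

print(inner_product_word_at_a_time([1, 2, 3], [6, 5, 4]))
print(inner_product_combining_forms([1, 2, 3], [6, 5, 4]))
```

both print 28, but the second version never names an index or a mutable cell, which is the kind of reasoning-friendly structure the paper argues for.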

i always hated functional languages when we studied them (they seemed less useful for interfacing with APIs, which is what most programming these days is about), but maybe i should reconsider.